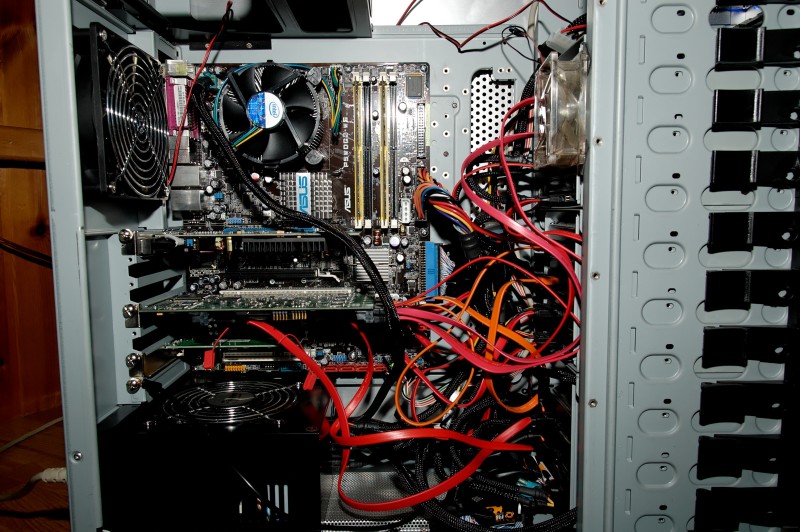

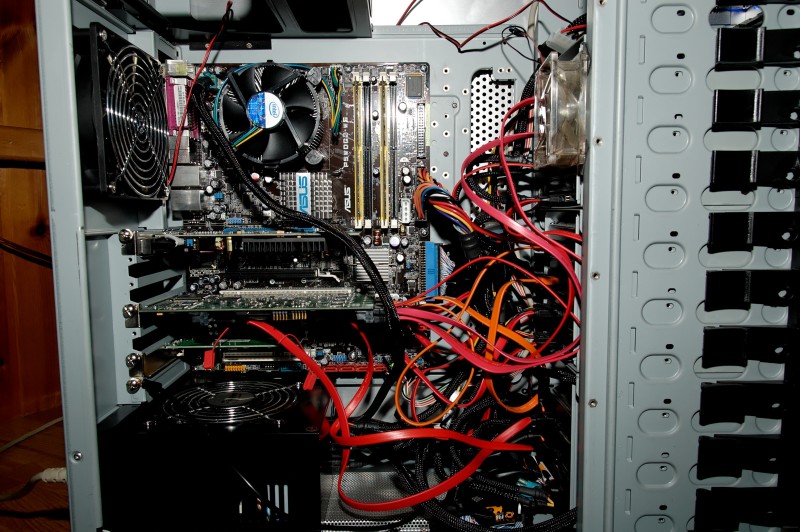

It is, however, not very easy to hide 8 SATA cables coming from a PCI card going to drives just a few inches away... I'm gonna try to tidy it up a bit more though, not just for the sake of airflow (which is plenty good), but to make it look a bit cleaner... And compared to my current workstation, it's not messy at all.

Was 3.7TB - then 2.7TB, now 19.1TB "quiet" server


Haha, you must be stoned. Just wait till you see the P182 + Corsair 520W + P5W setup I'm gonna build in a few weeks.


New stuff installed. I found out the P5WDG2-WS doesn't support Conroe, so it had to be a 3.2GHz Celeron instead...

New performance numbers:


```
Version  1.03       ------Sequential Output------ --Sequential Input- --Random-
                    -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
Oberon           4G            98310  15 44507   8           299311  20  58.3   0
```

-

jaldridge6

- Posts: 319

- Joined: Wed May 09, 2007 2:31 am

- Location: Hell


-

Konnetikut

- Posts: 153

- Joined: Sat Jan 13, 2007 10:14 pm

- Location: Vancouver


I feel your pain on the cabling...

I have a RAID6 box that would probably give some of the minimalist zealots here apoplexy.

Short of using a controller with a proprietary multiplexing connector, making the cables neater is just going to put stress on the connectors, and won't really help airflow that much. Sometimes you just need a hurkin' ugly box to get the job done. If what you have isn't cutting it thermally, then worry about airflow; not everything can look and sound like an HTPC.


I envy your 9650

I have to agree on the minimalist stuff; it's mostly impossible to get a box like that looking as clean as a P182 with one hard drive... especially since the Stacker doesn't offer any places to hide cables at all.

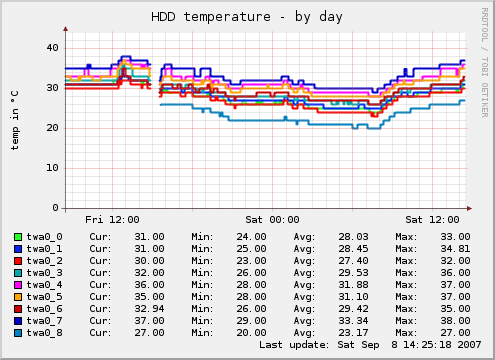

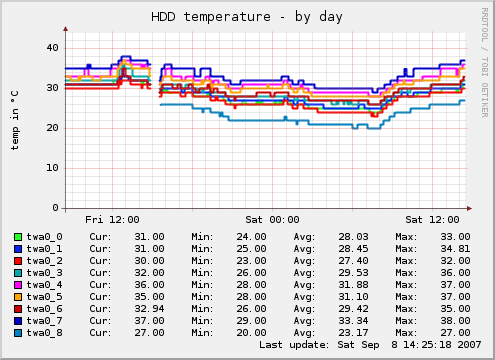

Works pretty well, but with the new fans the temps are a bit higher, although I can barely hear it now.

(ambient temp is 25-26C)

Twa0_8 is the drive I added yesterday, in the bottom cooler where there are only two drives: the 500GB and a 30GB WD.


Software RAID is really never the weak link. The BUS is almost ALWAYS the weak link. The problem is that most/all cheap SATA controllers use the PCI bus. As long as the drives have enough bandwidth, there aren't any problems with software RAID:

```
bexamous@bex:~$ cat /proc/mdstat
Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdd1[0] sdo1[5] sdf1[4] sde1[3] sdc1[2] sdb1[1]
      2433846400 blocks level 5, 128k chunk, algorithm 2 [6/6] [UUUUUU]
md1 : active raid6 sdg1[0] sdn1[7] sdm1[6] sdl1[5] sdk1[4] sdj1[3] sdi1[2] sdh1[1]
      1464162816 blocks level 6, 128k chunk, algorithm 2 [8/8] [UUUUUUUU]
unused devices: <none>
bexamous@bex:~$ sudo time dd if=/dev/md0 of=/dev/null bs=1M count=10000
10000+0 records in
10000+0 records out
10485760000 bytes (10 GB) copied, 29.625 seconds, 354 MB/s
0.02user 9.54system 0:29.66elapsed 32%CPU (0avgtext+0avgdata 0maxresident)k
0inputs+0outputs (1major+546minor)pagefaults 0swaps
bexamous@bex:~$ sudo time dd if=/dev/md1 of=/dev/null bs=1M count=10000
10000+0 records in
10000+0 records out
10485760000 bytes (10 GB) copied, 25.3165 seconds, 414 MB/s
0.02user 9.47system 0:25.37elapsed 37%CPU (0avgtext+0avgdata 0maxresident)k
0inputs+0outputs (2major+545minor)pagefaults 0swaps
bexamous@bex:~$
```

That's 8x250GB Samsung drives in RAID6 (md1) and 6x500GB Samsung drives in RAID5 (md0).
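To put rough numbers on the "the bus is the weak link" argument: conventional 32-bit/33 MHz PCI peaks at about 133 MB/s, and that figure is shared by every device on the bus. The sketch below simply divides a bus's peak bandwidth by the number of drives streaming through it. The `per_drive_share` helper is hypothetical, the 133 MB/s value is an assumed theoretical peak, and real PCI throughput lands below it.

```shell
#!/bin/sh
# Hypothetical helper: best-case MB/s each drive gets when N drives
# stream through one shared bus at the same time.
# Integer shell arithmetic, so the result is truncated.
per_drive_share() {
  bus_mbps=$1   # peak bus bandwidth in MB/s (assumed ~133 for 32-bit/33 MHz PCI)
  drives=$2     # number of drives sharing that bus
  echo $(( bus_mbps / drives ))
}

# Example: an 8-port SATA card sitting on plain PCI
per_drive_share 133 8
```

With all eight drives reading at once that is roughly 16 MB/s each, well below the sequential rate of a single SATA drive, so the card rather than the md layer sets the ceiling.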


```
$ dd if="large hdtv file.mkv" of=/dev/null
16529066+1 records in
16529066+1 records out
8462882179 bytes (8.5 GB) copied, 26.9014 seconds, 315 MB/s
```
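dd's MB/s figure is decimal megabytes (10^6 bytes) per second, so it can be cross-checked from the byte count and elapsed time it prints. A small sketch; `dd_rate` is a hypothetical helper name, and it leans on awk for the floating-point division since plain sh only does integers.

```shell
#!/bin/sh
# Hypothetical helper: recompute dd's throughput figure.
# $1 = bytes copied, $2 = elapsed seconds; prints decimal MB/s, rounded.
dd_rate() {
  awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.0f\n", bytes / secs / 1e6 }'
}

dd_rate 8462882179 26.9014     # the .mkv read above
dd_rate 10485760000 29.625     # the /dev/md0 read earlier in the thread
```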

Speaking of file servers, here's mine (the CM Gemini II came later, with one 1200rpm Noctua on it):

It's a C2D E6550 with 8GB of RAM and 8 x WD7500AAKS, with some partitions in Linux kernel RAID. Here's an example of bonnie++ on RAID5 (I have no RAID0 to show off):

```
Version 1.93c       ------Sequential Output------ --Sequential Input- --Random-
Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
titan-raid5     16G  1001  94 176941  27 100796  16  1862  98 330395  27 312.1   5
Latency             10488us     474ms     205ms   26093us   74405us     173ms
Version 1.93c       ------Sequential Create------ --------Random Create--------
titan-raid5         -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
              files  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
                 64   981   3 +++++ +++   967   1   943   3 +++++ +++   875   3
Latency               495ms      82us     246ms     437ms      61us     492ms
```

XFS is used for massive caching; small-file performance would be much better with ReiserFS...

-

matt_garman

- *Lifetime Patron*

- Posts: 541

- Joined: Sun Jan 04, 2004 11:35 am

- Location: Chicago, Ill., USA


> trxman wrote: speaking about file servers, here's mine (CM GeminiII came later with one 1200rpm Noctua on it):
> ...

What are your drive temps?

I have this alias set up:

```bash
alias hddtemps='for i in /dev/sd? ; do sudo smartctl -d ata -a $i | grep -i tempera ; done'
```

A long night of benchmarking and tweaking later, and I can proudly present the following numbers:


This is with 9x500GB in RAID5, a total of 3.64TB.
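The 3.64TB figure follows from RAID5 giving up one drive's worth of space to parity, plus the gap between a drive maker's decimal gigabytes and the binary terabytes (2^40 bytes) most tools report. A sketch of that arithmetic; `raid_usable_tib` is a hypothetical helper that assumes equal-sized members and ignores md metadata and filesystem overhead (RAID5 loses one drive, RAID6 two).

```shell
#!/bin/sh
# Hypothetical helper: usable capacity of an md RAID5/RAID6 array, in TiB.
# $1 = RAID level (5 or 6), $2 = number of drives, $3 = drive size in decimal GB.
# Assumes equal-sized members; md superblocks and filesystem overhead are ignored.
raid_usable_tib() {
  case "$1" in
    5) parity=1 ;;
    6) parity=2 ;;
    *) echo "unsupported level: $1" >&2; return 1 ;;
  esac
  awk -v n="$2" -v p="$parity" -v gb="$3" \
    'BEGIN { printf "%.2f\n", (n - p) * gb * 1e9 / 2^40 }'
}

raid_usable_tib 5 9 500   # 9x500GB RAID5
raid_usable_tib 6 8 250   # 8x250GB RAID6
```

The second call comes out close to the 1464162816 1K blocks that /proc/mdstat reports for the 8x250GB RAID6 earlier in the thread.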


bonnie++:

```
Version  1.03       ------Sequential Output------ --Sequential Input- --Random-
                    -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
Oberon           4G           117897  19 43460   8           362375  23  62.7   0
```

IOzone:

```
" Initial write "  130368.55
" Rewrite "        77878.62
" Read "          311715.19
" Re-read "       315826.75
```

Edit: Also, I managed 97MB/s from my workstation to the fileserver via gigabit, copying from two drives, and copying from the fileserver to -three- drives in my workstation I managed to hit 106MB/s... not too shoddy for a Samba share and home gigabit equipment.
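For scale, gigabit Ethernet signals at 1000 Mbit/s, which is 125 MB/s before any Ethernet, IP, TCP or SMB overhead is taken out, so 106 MB/s is already most of the wire. A quick sketch of that ratio; `gige_pct` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: share of gigabit line rate a transfer reached.
# $1 = observed throughput in decimal MB/s.
# 1000 Mbit/s / 8 bits per byte = 125 MB/s raw, before protocol overhead.
gige_pct() {
  awk -v mbs="$1" 'BEGIN { printf "%.0f\n", mbs / 125 * 100 }'
}

gige_pct 97    # workstation -> fileserver
gige_pct 106   # fileserver -> workstation
```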

Bravo!

I've never managed to get such high performance from a Samba share, and it's not about the RAID array (my bonnie++ numbers above show that).

NFS and especially FTP had good results, but Samba did not, no matter what I tried. All I've ever gotten from Samba is ~30-40MB/s.

If you find some time, could you post your Samba server/client confs?


You might want to tweak the network settings as well. Here's speed.sh:

```bash
#!/bin/bash
#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#
# This script might improve your bandwidth utilization.
# It tweaks buffer memory usage.
# It doesn't harm your NIC directly in any way.
# I got this from a friend who got it from a friend...
# It has been tested on four computers, all connected to
# 100mbit connections. The utilization reached close to 100%.
#
# The script will make a backup of your original settings,
# called speed_backup.sh. Run it to restore original values.
#
# Use it at your own risk.
#
# /Zio
#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#-#

# Writing to /proc/sys needs root; bail out early instead of
# creating a backup and then failing on every write below.
if [ "$(id -u)" -ne 0 ] ; then
  echo "Please run as root." >&2
  exit 1
fi

# Create backup.
if [ ! -e ./speed_backup.sh ] ; then
  echo -n "Creating backup ($(pwd)/speed_backup.sh)... "
  echo "#!/bin/bash" > ./speed_backup.sh
  echo "" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/ipv4/tcp_sack)\" > /proc/sys/net/ipv4/tcp_sack" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/ipv4/tcp_timestamps)\" > /proc/sys/net/ipv4/tcp_timestamps" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/ipv4/tcp_mem)\" > /proc/sys/net/ipv4/tcp_mem" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/ipv4/tcp_rmem)\" > /proc/sys/net/ipv4/tcp_rmem" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/ipv4/tcp_wmem)\" > /proc/sys/net/ipv4/tcp_wmem" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/core/optmem_max)\" > /proc/sys/net/core/optmem_max" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/core/rmem_default)\" > /proc/sys/net/core/rmem_default" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/core/rmem_max)\" > /proc/sys/net/core/rmem_max" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/core/wmem_default)\" > /proc/sys/net/core/wmem_default" >> ./speed_backup.sh
  echo "echo \"$(cat /proc/sys/net/core/wmem_max)\" > /proc/sys/net/core/wmem_max" >> ./speed_backup.sh
  chmod 744 ./speed_backup.sh
  echo -e "\tdone!"
else
  echo "Backup found ($(pwd)/speed_backup.sh). Skipping creation of one."
fi

# Boost buffer settings.
# Note: tcp_mem is counted in pages; tcp_rmem/tcp_wmem and the
# net/core values are in bytes.
echo -n "Boosting... "
echo "0" > /proc/sys/net/ipv4/tcp_sack         # disable selective ACKs
echo "0" > /proc/sys/net/ipv4/tcp_timestamps   # disable TCP timestamps
echo "31293440 31375360 31457280" > /proc/sys/net/ipv4/tcp_mem
echo "655360 13980800 27961600" > /proc/sys/net/ipv4/tcp_rmem
echo "655360 13980800 27961600" > /proc/sys/net/ipv4/tcp_wmem
echo "1638400" > /proc/sys/net/core/optmem_max
echo "10485600" > /proc/sys/net/core/rmem_default
echo "20971360" > /proc/sys/net/core/rmem_max
echo "10485600" > /proc/sys/net/core/wmem_default
echo "20971360" > /proc/sys/net/core/wmem_max
echo -e "\tdone!"
```
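One thing worth knowing before copying the values above: per the Linux kernel's ip-sysctl documentation, `tcp_mem` is counted in pages, while `tcp_rmem`/`tcp_wmem` are in bytes. A sketch that converts the three `tcp_mem` numbers to GiB; `tcp_mem_gib` is a hypothetical helper, and the page size defaults to whatever `getconf PAGESIZE` reports on the machine (commonly 4096 on x86).

```shell
#!/bin/sh
# Hypothetical helper: convert tcp_mem's three values (in pages) to GiB.
# $1 = "low pressure high" as written to /proc/sys/net/ipv4/tcp_mem
# $2 = page size in bytes (optional; defaults to this machine's page size)
tcp_mem_gib() {
  pagesize=${2:-$(getconf PAGESIZE)}
  echo "$1" | awk -v ps="$pagesize" '{
    for (i = 1; i <= NF; i++)
      printf "%s%.1f", (i > 1 ? " " : ""), $i * ps / 2^30
    printf "\n"
  }'
}

tcp_mem_gib "31293440 31375360 31457280" 4096   # values from speed.sh
```

With 4 KiB pages the script's `tcp_mem` lets TCP use roughly 120 GiB, which effectively removes the global limit rather than tuning it. Passing `"$(cat /proc/sys/net/ipv4/tcp_mem)"` instead shows the current setting.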

-

matt_garman

- *Lifetime Patron*

- Posts: 541

- Joined: Sun Jan 04, 2004 11:35 am

- Location: Chicago, Ill., USA


> Wibla wrote: A long night of benchmarking and tweaking later, and I can proudly present the following numbers:
> ...

What kind of tweaking did you do?

That's an old version of bonnie, by the way.

Mine:

```
Version 1.93c       ------Sequential Output------ --Sequential Input- --Random-
Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
septictank       4G   477  97 108182  30 57677  19   811  98 252520  55 387.8   8
Latency             17630us    1049ms   77018us   40516us     137ms     343ms
Version 1.93c       ------Sequential Create------ --------Random Create--------
septictank          -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
              files  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
                 16  2062  19 +++++ +++  2083  17  2192  20 +++++ +++  1958  18
Latency               161ms      82us   64501us   95779us      25us     283ms
```

Speaking of big file servers, I received my hec RA466A00 4U server case last night, in which I intend to install several Supermicro CSE-MT35 hot-swap enclosures. Even though the case is listed as a rack mount, the side handles come off (via screws) and it has screw holes for casters or feet, i.e., it can easily be converted to a tower. The front door also looks removable via screws. I only looked it over a bit last night, but my first impression was quite positive. Hopefully I'll get a chance to post lots of pics and maybe even a build log (but don't hold me to that!).

-

Nick Geraedts

- SPCR Reviewer

- Posts: 561

- Joined: Tue May 30, 2006 8:22 pm

- Location: Vancouver, BC

> trxman wrote: thx for the script. I've run it. NFS and FTP use almost 100% of 1Gbps, but lazy Samba tops out at 30MB/s. Never mind: those who want performance will have to install Linux.
> (All transfers were done from RAM to RAM; no hard drives or RAID arrays were used.)

I beg to differ... as I usually do with Windows/Linux debates. See for yourself what Windows file sharing can accomplish.

This transfer moved the 1.8GB installer of the Crysis Demo from the RAID0 array in my workstation to the RAID5 array in my fileserver (mounted as a network share on my workstation). I'm using a D-Link DGS-1008D gigabit switch and the Marvell PCIe network controller on the P5B-Deluxe motherboard in both systems. Task Manager showed network usage of around 95% during the entire transfer.

The Linux implementation of Samba is what sucks here; modern network filesystems are never the limitation in this case. If anything, FTP should give the worst overall performance for small files, due to the large overhead of setting up each individual file transfer.